
from bs4 import BeautifulSoup

import requests

import re as reg

from selenium import webdriver

import os

from multiprocessing import Pool

from selenium.webdriver.chrome.options import Options

def get_chapter():

try:

src='https://www.manhuagui.com/comic/19430/'

header={"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"}

re=requests.get(url=src,headers=header)

html=re.text

print(re.status_code)

soup=BeautifulSoup(html,'lxml')

haha=soup.find_all(class_="chapter-list cf mt10")[0]

for i in haha.find_all(name='li'):

chapter=i.a['href']

chapterTitle=i.a['title']

yield{

'chapter':chapter,

'title':chapterTitle

}

except:

print('获取章节地址出错')

def get_detail(url,title):

src='https://www.manhuagui.com/'+url

header={"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"}

try:

re=requests.get(url=src,headers=header)

html=re.text

soup=BeautifulSoup(html,'lxml')

headerTitle=soup.find_all(class_="w980 title")[0]

page=headerTitle.find_all(name='span')[1]

dd=reg.findall(r"\d+",page.text)[0]

num=int(dd)

for i in range(1,num+1):

print()

url=src+'#p='+str(i)

get_img(url,title)

except:

print("获取图片失败")

def get_img(url,title):

try:

print(url)

chrome_options=Options()

chrome_options.add_argument('--headless')

bro = webdriver.Chrome(chrome_options=chrome_options)

bro.get(url)

html=bro.page_source

bro.close()

soup=BeautifulSoup(html,'lxml')

src=soup.find(id="mangaFile")['src']

save_img(src,title)

except:

print("获取真实地址失败")

bigbig=0

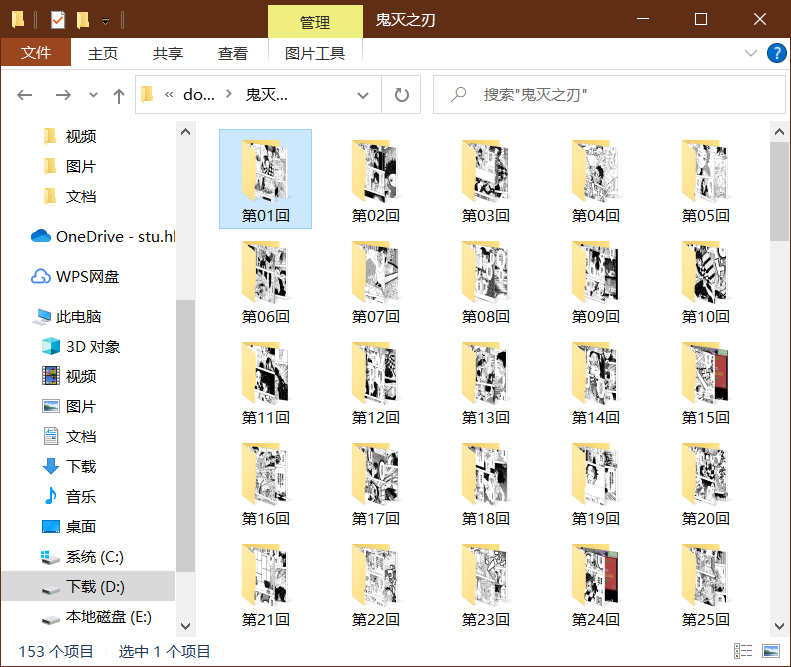

def save_img(src,title):

try:

global bigbig

path='D:\download\鬼灭之刃\{}'.format(title)

if not os.path.exists(path):

os.makedirs('D:\download\鬼灭之刃\{}'.format(title))

path='{}\{}.jpeg'.format(path,bigbig)

bigbig=bigbig+1

header={

"accept": "image/avif,image/webp,image/apng,image/*,*/*;q=0.8",

"accept-encoding": "gzip, deflate, br",

"accept-language": "zh-CN,zh;q=0.9,en-US;q=0.8,en;q=0.7",

"dnt": "1",

"referer": "https://www.manhuagui.com/",

"sec-fetch-dest": "image",

"sec-fetch-mode": "no-cors",

"sec-fetch-site": "cross-site",

"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"

}

print(src)

re=requests.get(url=src,headers=header)

content=re.content

with open(path,'wb') as haha:

haha.write(content)

print('ok!!!!')

except:

print("保存到文件时出错")

booksrc=[]

for i in get_chapter():

booksrc.append(i)

def main(i):

src=i.get('chapter')

title=i.get('title')

print('正在获取{} {}'.format(title,src))

get_detail(src,title)

if __name__=='__main__':

pool=Pool(processes=4)

group=booksrc

print(group)

pool.map(main,group)

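The URL and file-naming steps of the scraper can be pulled out into small pure functions and checked without a browser or network access. The sketch below takes the site root and the `#p=` page fragment from the script. The helper names (`chapter_url`, `page_urls`, `page_count`, `image_path`) and the example chapter href and span text are hypothetical, chosen for illustration only.

```python
import os
import re
from urllib.parse import urljoin

BASE_URL = 'https://www.manhuagui.com/'


def chapter_url(href):
    """Resolve a site-relative chapter href against the site root (hypothetical helper)."""
    return urljoin(BASE_URL, href)


def page_urls(chapter, num_pages):
    """One URL per page, selected through the '#p=' fragment (hypothetical helper)."""
    return ['{}#p={}'.format(chapter, i) for i in range(1, num_pages + 1)]


def page_count(span_text):
    """Take the first integer in the text, as the scraper does (hypothetical helper)."""
    return int(re.findall(r'\d+', span_text)[0])


def image_path(root, title, page_no):
    """Per-chapter folder, one file per page number (hypothetical helper)."""
    return os.path.join(root, title, '{}.jpeg'.format(page_no))


if __name__ == '__main__':
    url = chapter_url('/comic/19430/123456.html')  # hypothetical href
    print(url)
    print(BASE_URL + '/comic/19430/123456.html')  # plain concatenation: note the '//'
    print(page_urls(url, 3))
    print(page_count('45 pages'))  # hypothetical span text
    print(image_path('out', 'ch1', 3))
```

`urljoin` is used instead of string concatenation because it handles both absolute and site-relative hrefs. Naming files by page number keeps the output stable no matter which worker process downloads the page.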